Workshop Co-Organizers: Prof. Caroline Trippel (Stanford) and Prof. Heiner Litz (UC Santa Cruz).

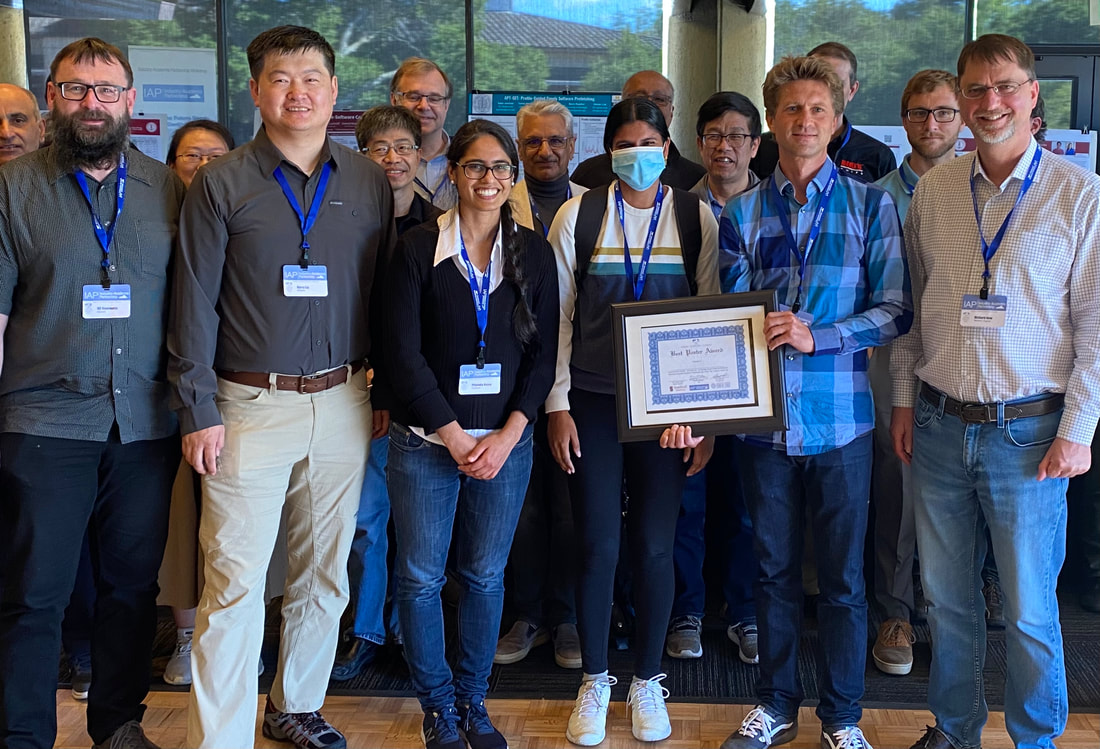

Stanford PhD student Kavya Sreedhar receives the Best Poster Award for "A Fast Large-Integer Extended GCD Algorithm and Hardware Design for Verifiable Delay Functions and Modular Inversion."

Left to right (front row) are Dr. Ulf Hanebutte (Marvell), Dr. Harry Liu (Alibaba), Prof. Priyanka Raina (Stanford), Kavya, Prof. Heiner Litz (UCSC), and Dr. Richard New (Western Digital). Behind Kavya are Dr. Pankaj Mehra (Elephance) and Dr. Jimmy Wu (Futurewei).

Attendees Gather for a Group Photo at the End of the Day.

AGENDA - Videos of Presentations

8:30-8:55 – Badge Pick-up – Coffee/Tea and Breakfast Food/Snacks

8:55-9:00 – Welcome – Prof. Caroline Trippel and Prof. Heiner Litz

9:00-9:30 – Dr. Partha Ranganathan, Google, VP and Engineering Fellow, "The Forecast: Cloudy with a Chance of ML"

9:30-10:00 – Dr. Gilles Pokam, Intel, Principal Engineer, “Emerging Server Architecture Frontiers at Hyperscale”

10:00-10:30 – Dr. Niket Agarwal, Meta, Principal Software Engineer, “Large Scale Storage and Data Ingestion for ML Training”

10:30-11:00 – Prof. Andrew Quinn, UCSC, “Data-Centric Debugging or: How I Learned to Stop Worrying and Use 'Big-Data' Techniques to Diagnose Software Bugs”

11:00-11:30 – Lightning Round for Student Posters

11:30-12:30 – Lunch and Poster Viewing

12:30-1:00 – Prof. Peter Alvaro, UCSC, “What not Where: Sharing in a World of Distributed, Persistent Memory”

1:00-1:30 – Dr. Richard New, Western Digital, VP of Research, “Innovations in Storage Technology from Device Physics Through the Software Stack”

1:30-2:00 – Prof. Heiner Litz, UCSC, “Unblocking Performance Bottlenecks in Data Center Workloads”

2:00-2:30 – Dr. Ulf Hanebutte, Marvell, Performance Architect, “Accelerating ML Anomaly Detection Models for Networking in the Datacenter”

2:30-3:00 – Break

3:00-3:30 – Dr. Harry Liu, Alibaba, Director of Network R&D, “The Hyper-Converged Programmable Gateway in Alibaba's Edge Cloud”

3:30-4:00 – Prof. Priyanka Raina, Stanford, “AHA: An Agile Approach to the Design of Coarse-Grained Reconfigurable Accelerators and Compilers”

4:00-4:15 – Best Poster Award & Reception

ABSTRACTS and BIOS (listed in order of appearance)

Dr. Partha Ranganathan, Google, VP and Engineering Fellow, "The Forecast: Cloudy with a Chance of ML"

Abstract: Growing volumes of data, smarter edge devices, and new, diverse workloads are causing demand for computing to grow at phenomenal rates. At the same time, Moore's law is slowing down, stressing traditional assumptions that systems become cheaper and faster every year. How do we respond to the current opportunities and deliver exponentially increasing compute capacity at a fixed cost? Specifically, we will discuss the innovations and trends shaping the future computing landscape: more “out-of-the-box” designs that consider the entire datacenter as a computer, custom silicon and software-defined infrastructure, broader open innovation ecosystems, and a whole lot of machine learning (ML), all delivered in the cloud. We will conclude with a discussion of opportunities for future innovation and industry-academia partnership on systems for cloud computing.

Bio: Partha Ranganathan is currently a VP and Technical Fellow at Google, where he is the area technical lead for hardware and datacenters, designing systems at scale. Prior to this, he was an HP Fellow and Chief Technologist at Hewlett Packard Labs, where he led their research on systems and data centers. Partha has worked on several interdisciplinary systems projects with broad impact on both academia and industry, including widely used innovations in energy-aware user interfaces, heterogeneous multi-cores, power-efficient servers, accelerators, and disaggregated and data-centric data centers. He has published extensively (including as a co-author of the popular textbook "The Datacenter as a Computer"), is a co-inventor on more than 100 patents, and has been recognized with numerous awards. He has been named a top-15 enterprise technology rock star by Business Insider and one of the top 35 young innovators in the world by MIT Technology Review, and he is a recipient of the ACM SIGARCH Maurice Wilkes Award, Rice University's Outstanding Young Engineering Alumni Award, and the IIT Madras Distinguished Alumni Award. He is also a Fellow of the IEEE and ACM, and is currently on the board of directors of the Open Compute Project.

Dr. Gilles Pokam, Intel, “Emerging Server Architecture Frontiers at Hyperscale”

Abstract: Today's hyperscale workloads, e.g., web services, are powered by modern data centers featuring hundreds of thousands of servers. The compute demand of these data centers is expected to increase, driven primarily by growth in the user base and data, and by increased software complexity. These changes are already causing the industry to rethink the architecture of the servers that will power future hyperscale data centers. In this talk, we draw on frontier work analyzing trends in hyperscale workloads to discuss some of the changes that are inspiring server architecture redesign.

Bio: Dr. Gilles Pokam is a Principal Engineer at Intel. Prior to joining Intel, Gilles was a researcher at the IBM T.J. Watson Research Center in NY and a postdoctoral researcher at UCSD. His research focuses on microarchitecture and its interactions with systems and security. He currently leads work on developing next-generation frontend microarchitecture mechanisms to improve the performance and security of emerging datacenter workloads. Dr. Pokam holds a PhD in Computer Science from INRIA (France) and has authored 40+ publications and 30+ patents in architecture and systems. He is a recipient of a 2006 IEEE Micro Top Picks award and a member of the MICRO Hall of Fame.

Dr. Niket Agarwal, Meta, "Large Scale Storage and Data Ingestion for ML Training"

Abstract: Meta's products, such as News Feed, Ads, IG Reels, and language translation, rely heavily on ML ranking and recommendation. Our ranking models consume massive datasets to continuously improve the user experience on our platform. In this talk, we discuss our experience building infrastructure to serve massive-scale data to the thousands of AI models driving our products. Further, we present the workload characteristics of AI training data pipelines and the challenges of scaling these systems for industry-scale use cases, and we describe the HW/SW co-design optimizations we have applied to these data ingestion pipelines. We will conclude with technical and research challenges in this space.

Bio: Niket Agarwal is a Principal Software Engineer in the Infrastructure team at Meta/Facebook. His interests and background include system architecture co-design across compute, storage, and ML systems. Prior to Meta, Niket spent nearly a decade at Google building compute and storage systems that powered all of Google's infrastructure, including Google Cloud. Niket received his M.A. and Ph.D. from Princeton University and his B.Tech. in Computer Science from the Indian Institute of Technology Kharagpur.

Prof. Andrew Quinn, UCSC, “Data-Centric Debugging or: How I Learned to Stop Worrying and Use 'Big-Data' Techniques to Diagnose Software Bugs”

Abstract: Software bugs are pervasive and costly. As a result, developers spend the majority of their time debugging their software. Traditionally, debugging involves inspecting and tracking the runtime behavior of a program. Alas, program inspection is computationally expensive, especially when employing powerful techniques such as dynamic information flow tracking, data-race detection, and data structure invariant checks. Moreover, to use current tools (e.g., gdb, Intel PIN), developers write complex debugging logic in a style that mirrors traditional software. So, debugging logic faces the same challenges as traditional software, including concurrency, fault handling, and dynamic memory. In general, specifying debugging logic can be as difficult as writing the program being debugged!

In this talk, I will describe a data-centric debugging framework that alleviates the performance and specification limitations of current debugging tools. The key idea is to treat debugging as a data-oriented task, in which debugging questions are conceptually expressed as queries over an execution of a program. I will discuss how this conceptual model enables massive-scale parallelism to accelerate debugging analyses (SledgeHammer OSDI’18), even those, such as information flow tracking, that are "embarrassingly sequential" (JetStream OSDI’16). In addition, I will describe my recently published work on a relational model that decreases the latency and complexity of debugging our most pernicious software bugs (OmniTable OSDI’22).

Bio: Andrew Quinn is an assistant professor at the University of California, Santa Cruz. His research investigates systems, tools, and techniques to make software more reliable. His dissertation focused on solutions that help developers better understand their software for tasks such as debugging, security forensics, and data provenance. In addition, he has recently been working on solutions to improve the reliability of applications that use emerging hardware, including persistent memory, heterogeneous systems, and edge computing, as well as on new systems for production-grade deterministic record and replay.

Andrew graduated from the University of Michigan in 2021. While at Michigan, he received an NSF fellowship (2017) and an MSR fellowship (2017). Before Michigan, Andrew attended Denison University, where he received degrees in Computer Science and Mathematics. When not working, Andrew is likely wrestling with his dogs, enjoying a long run, or (a recent addition to the list) surfing.

Prof. Peter Alvaro, UCSC, “What not Where: Sharing in a World of Distributed, Persistent Memory”

Abstract: A world of distributed, persistent memory is on its way. Our programming models traditionally operate on short-lived data representations tied to ephemeral contexts such as processes or computers. In the limit, however, data lifetime is infinite compared to these transient actors. We discuss the implications for programming models raised by a world of large and potentially persistent distributed memories, including the need for explicit, context-free, invariant data references. We present a novel operating system that uses wisdom from both storage and distributed systems to center the programming model around data as the primary citizen, and reflect on the transformative potential of this change for infrastructure and applications of the future.

Bio: Peter Alvaro is an Associate Professor of Computer Science at the University of California, Santa Cruz, where he leads the Disorderly Labs research group (disorderlylabs.github.io). His research focuses on using data-centric languages and analysis techniques to build and reason about data-intensive distributed systems, in order to make them scalable, predictable, and robust to the failures and nondeterminism endemic to large-scale distribution. Peter earned his PhD at UC Berkeley, where he studied with Joseph M. Hellerstein. He is a recipient of the NSF CAREER Award, the Facebook Research Award, the USENIX ATC 2020 Best Presentation Award, the SIGMOD 2021 Distinguished PC Award, and the UCSC Excellence in Teaching Award.

Dr. Richard New, Vice President of Research, Western Digital, “Innovations in Storage Technology from Device Physics Through the Software Stack”

Abstract: Today's cloud infrastructure depends on an abundance of data stored primarily on HDDs and SSDs, managed through layers of software within the framework of scale-out distributed storage applications. While storage cost and performance have both scaled dramatically due to the introduction of new technologies at the device level, the basic protocols and mechanisms by which software talks to storage have been stable for decades. This talk will explore how recent changes in the physical storage media (HDDs and SSDs) are driving new storage interface protocols and requiring changes throughout the storage stack, up through the software application layer, for key software components in the cloud, including file systems and databases. It will describe how two such technologies, Shingled Magnetic Recording (SMR) for HDDs and Zoned Namespace (ZNS) for SSDs, are driving new performance, reliability, and cost efficiencies within the storage domain, but also require fundamental changes in the storage software layers. Finally, we'll look at where storage technologies are headed and briefly discuss some further research opportunities for storage that we believe will be important in the next decade.

Bio: Richard New is the Vice President of Research at Western Digital, where he oversees the company’s advanced research across topics ranging from physical sciences to software architecture. Richard received a BS in Electrical Engineering from the University of Waterloo, and an MS and PhD in Electrical Engineering from Stanford University. Richard was born in Cambridge, England, and grew up in Waterloo, Canada. He currently resides in Palo Alto with his family and enjoys working on robotics projects with his kids.

Prof. Heiner Litz, UCSC, “Unblocking Performance Bottlenecks in Data Center Workloads”

Abstract: Modern complex datacenter applications exhibit unique characteristics, such as extensive data and instruction footprints, complex control flow, and hard-to-predict branches, that are not adequately served by existing microprocessor architectures. In particular, these workloads exceed the capabilities of microprocessor structures such as the instruction cache, branch target buffer (BTB), branch predictor, and data caches, causing significant degradation in performance and energy efficiency.

In my talk, I will provide a detailed characterization of datacenter applications, highlighting the importance of addressing frontend and backend performance issues. I will then introduce three new techniques that address these challenges by improving the branch predictor, data cache, and instruction scheduler. I will make the case for profile-guided optimizations that amortize overheads across the fleet and have been successfully deployed at Google and Intel, serving millions of users daily.

Bio: Heiner Litz is an Assistant Professor at the University of California, Santa Cruz, working in the field of Computer Architecture and Systems. His research focuses on improving the performance, cost, and efficiency of data center systems. Heiner is the recipient of the NSF CAREER award, Intel's Outstanding Researcher award, and Google's Faculty Award, and his work received the 2020 IEEE MICRO Top Pick award. Before joining UCSC, Heiner Litz was a researcher at Google and a postdoctoral research fellow at Stanford University with Profs. Christos Kozyrakis and David Cheriton. Dr. Litz received his Diplom and Ph.D. from the University of Mannheim, Germany, advised by Prof. Bruening.

Dr. Ulf Hanebutte, Marvell, “Accelerating ML Anomaly Detection Models for Networking in the Datacenter”

Abstract: AI-ML technologies are being widely deployed in a variety of applications, but their use within networking itself has been quite limited. This talk will showcase a solution for high-rate anomaly detection based on a network providing smart statistics on a group of packets and a HW-optimized autoencoder neural network derived from an ensemble of autoencoders.

Additionally, this talk will highlight the flexibility and performance of Marvell’s Machine Learning Inference Processor integrated within the upcoming OCTEON 10 DPU, which enables best-in-class inferencing directly in the data pipeline.

Bio: Dr. Ulf Hanebutte is a Sr. Principal Architect at Marvell with a focus on HW/SW co-design for Machine Learning. In this role he has contributed to multiple generations of ML inference accelerator HW and their SW stacks. Collaborating and solving big problems together has marked his extensive career, both at the National Labs and in the private sector, with projects ranging from exascale HPC to IoT for energy-efficient buildings. He holds a Ph.D. from Northwestern University and a Dipl.-Ing. in Aerospace Engineering from the University of Stuttgart.

Dr. Harry Liu, Alibaba, Director of Network R&D, “The Hyper-Converged Programmable Gateway in Alibaba's Edge Cloud”

Abstract: Edge cloud provides significant performance and cost advantages for emerging applications such as cloud gaming, video conferencing, and AR/VR. However, unlike central clouds, edge cloud also faces tremendous challenges due to limited resources, demands for high performance, and hardware heterogeneity. Alibaba solves these problems by introducing a hyper-converged gateway platform, “SNA”, that provides the cloud network stack and network functions within the network rather than on the hosts. SNA is a heterogeneous computing platform that merges network switching, network virtualization, and various network functions on top of programmable network ASICs, FPGAs, and CPUs. It has been deployed to support several multi-million-user products in Alibaba’s edge cloud. The key technical enabler for the rapid and safe deployment of the hyper-converged gateways running on SNA is our programmable network development platform, “TaiX”, which provides novel and practical programming abstractions, compilers, debuggers, testers, orchestrators, and operation tools.

Bio: Hongqiang "Harry" Liu is a Director of Network Research and Edge Network Infrastructure Engineering at Alibaba Cloud and Alibaba DAMO Academy. He received his Ph.D. degree from the Department of Computer Science at Yale University in 2014. His research focuses on data center networks, network transports, and programmable networks. He has published more than 20 papers in top-tier academic conferences, such as ACM SIGCOMM, ACM SOSP, and USENIX NSDI. He also serves on the technical program committees of SIGCOMM and NSDI. He received an Honorable Mention for the prestigious ACM SIGCOMM Doctoral Dissertation Award in 2015.

Prof. Priyanka Raina, Stanford, “AHA: An Agile Approach to the Design of Coarse-Grained Reconfigurable Accelerators and Compilers”

Abstract: With the slowing of Moore's law, computer architects have turned to domain-specific hardware specialization to continue improving the performance and efficiency of computing systems. However, specialization typically entails significant modifications to the software stack to properly leverage the updated hardware. The lack of a structured approach for updating both the compiler and the accelerator in tandem has impeded many attempts to systematize this procedure. We propose a new approach to enable flexible and evolvable domain-specific hardware specialization based on coarse-grained reconfigurable arrays (CGRAs). Our agile methodology employs a combination of new programming languages and formal methods to automatically generate the accelerator hardware and its compiler from a single source of truth. This enables the creation of design-space exploration frameworks that automatically generate accelerator architectures that approach the efficiencies of hand-designed accelerators, with a significantly lower design effort for both hardware and compiler generation. Our current system accelerates dense linear algebra applications, but is modular and can be extended to support other domains. Our methodology has the potential to significantly improve the productivity of hardware-software engineering teams and enable quicker customization and deployment of complex accelerator-rich computing systems.

Bio: Priyanka Raina is an Assistant Professor of Electrical Engineering at Stanford University. She received her B.Tech. in Electrical Engineering from IIT Delhi in 2011 and her S.M. and Ph.D. in Electrical Engineering and Computer Science from MIT in 2013 and 2018, respectively. Priyanka’s research is on creating high-performance and energy-efficient architectures for domain-specific hardware accelerators in existing and emerging technologies, and on agile hardware-software co-design. Her research has won best paper awards at the ESSCIRC and MICRO conferences and in the JSSC journal. She is also a recipient of the Intel Rising Star Faculty Award and the Hellman Faculty Scholar Award, and is a Terman Faculty Fellow.

8:30-8:55 – Badge Pick-up – Coffee/Tea and Breakfast Food/Snacks

8:55-9:00 – Welcome – Prof. Caroline Trippel and Prof. Heiner Litz

9:00-9:30 – Dr. Partha Ranganathan, Google, VP and Engineering Fellow, "The Forecast: Cloudy with a Chance of ML"

9:30-10:00 – Dr. Gilles Pokam, Intel, Principal Engineer, “Emerging Server Architecture Frontiers at Hyperscale”

10:00-10:30 – Dr. Niket Agarwal, Meta, Principal Software Engineer, “Large Scale Storage and Data Ingestion for ML Training"

10:30-11:00 – Prof. Andrew Quinn, UCSC, “Data-Centric Debugging or: How I Learned to Stop Worrying and Use 'Big-Data' Techniques to Diagnose Software Bugs”

11-11:30 – Lightning Round for Student Posters

11:30-12:30 – Lunch and Poster Viewing

12:30-1:00 – Prof. Peter Alvaro, UCSC, “What not Where: Sharing in a World of Distributed, Persistent Memory”

1:00-1:30 – Dr. Richard New, Western Digital, VP of Research, “Innovations in Storage Technology from Device Physics Through the Software Stack”

1:30-2:00 – Prof. Heiner Litz, UCSC, “Unblocking Performance Bottlenecks in Data Center Workloads”

2:00-2:30 – Dr. Ulf Hanebutte, Marvell, Performance Architect, “Accelerating ML Anomaly Detection Models for Networking in the Datacenter”

2:30-3:00 – Break

3:00-3:30 – Dr. Harry Liu, Alibaba, Director of Network R&D, “The Hyper-Converged Programmable Gateway in Alibaba's Edge Cloud”

3:30-4:00 – Prof. Priyanka Raina, Stanford, “AHA: An Agile Approach to the Design of Coarse-Grained Reconfigurable Accelerators and Compilers”

4:00-4:15 – Best Poster Award & Reception

ABSTRACTS and BIOS (listed in order of appearance)

Dr. Partha Ranganathan, Google, VP and Engineering Fellow, "The Forecast: Cloudy with a Chance of ML"

Abstract: Growing volumes of data, smarter edge devices, and new, diverse workloads are causing demand for computing to grow at phenomenal rates. At the same time, Moore's law is slowing down, stressing traditional assumptions around cheaper and faster systems every year. How do you respond to the current opportunities, exponentially increasing compute capacity at a fixed cost? Specifically, we will discuss the innovations and trends shaping the future computing landscape -- more “out-of-the-box” designs that consider the entire datacenter as a computer for custom silicon and software-defined infrastructure, broader open innovation ecosystems, and a whole lot of machine learning (ML) --- all delivered in the cloud. We will conclude with a discussion of opportunities for future innovation and industry-academia partnership on systems for cloud computing.

Bio: Partha Ranganathan is currently a VP, technical Fellow at Google where he is the area technical lead for hardware and datacenters, designing systems at scale. Prior to this, he was a HP Fellow and Chief Technologist at Hewlett Packard Labs where he led their research on systems and data centers. Partha has worked on several interdisciplinary systems projects with broad impact on both academia and industry, including widely-used innovations in energy-aware user interfaces, heterogeneous multi-cores, power-efficient servers, accelerators, and disaggregated and data-centric data centers. He has published extensively (including being the co-author on the popular "Datacenter as a Computer" textbook), is a co-inventor on more than 100 patents, and has been recognized with numerous awards. He has been named a top-15 enterprise technology rock star by Business Insider, one of the top 35 young innovators in the world by MIT Tech Review, and is a recipient of the ACM SIGARCH Maurice Wilkes award, Rice University's Outstanding Young Engineering Alumni award, and the IIT Madras distinguished alumni award. He is also a Fellow of the IEEE and ACM, and is currently on the board of directors for OpenCompute.

Dr. Gilles Pokam, Intel, “Emerging Server Architecture Frontiers at Hyperscale”

Abstract: Today's hyperscale workloads, e.g., web services, are powered by modern data centers featuring 100s of thousands of servers. The compute demand of these data centers is expected to increase, essentially driven by growth in the user base and data, and increased SW complexity. These changes are already causing industry to rethink the architecture of the server that will power future hyperscale data centers. In this talk, we draw from frontiers in analyzing trends in hyperscale workloads to discuss some of the changes that's inspiring server architecture redesign.

Bio: Dr. Gilles Pokam is a Principal Engineer at Intel. Prior to joining Intel, Gilles was a researcher at IBM T.J. Watson Research Center in NY and a postdoc researcher at UCSD. His research focuses on microarchitecture and its interactions with Systems and Security. Dr. Gilles Pokam is currently leading work aiming at developing next generation frontend microarchitecture mechanisms to improve performance and security of emerging datacenter workloads. Dr. Gilles Pokam holds a PhD in Computer Science from INRIA (France) and has authored 40+ publications and 30+ patents in Architecture and Systems. He is the recipient of 2006 IEEE Top Pick Award and a member of MICRO Hall of Fames.

Dr. Niket Agarwal, Meta, "Large Scale Storage and Data Ingestion for ML Training"

Abstract: Meta's products like News Feed, Ads, IG Reels, language translation heavily rely on ML ranking and recommendation. Our ranking models consume massive datasets to continuously improve user experience on our platform. In this talk, we discuss our experience of building infrastructure to serve massive scale data to the 1000s of AI models driving our products. Further, we present AI training data pipeline workload characteristics and challenges in scaling these systems for industry-scale use cases as well as talk about HW/SW co-design optimizations we have done to these data ingestion pipelines. We will conclude with technical and research challenges in this space.

Bio: Niket Agarwal is a Principal Software Engineer in the Infrastructure team at Meta/Facebook. His interests and background include system architecture co-design across compute, storage and ML systems. Prior to Meta, Niket spent nearly a decade at Google building compute and storage systems that powered all of Google Infrastructure, including Google Cloud. Niket received his M.A and Ph.D. from Princeton University and BTech in Computer Science from Indian Institute of Technology Kharagpur.

Prof. Andrew Quinn, UCSC, “Data-Centric Debugging or: How I Learned to Stop Worrying and Use 'Big-Data' Techniques to Diagnose Software Bugs”

Abstract: Software bugs are pervasive and costly. As a result, developers spend the majority of their time debugging their software. Traditionally, debugging involves inspecting and tracking the runtime behavior of a program. Alas, program inspection is computationally expensive, especially when employing powerful techniques such as dynamic information flow tracking, data-race

detection, and data structure invariant checks. Moreover, to use current tools (e.g., gdb, Intel PIN), developers write complex debugging logic in a style that mirrors traditional software. So, debugging logic faces the same challenges as

traditional software, including concurrency, fault handling and dynamic memory. In general, specifying debugging logic can be as difficult as writing the program being debugged!

In this talk, I will describe a data-centric debugging framework that alleviates the performance and specification limitations of current debugging tools. The key idea is to treat debugging as a data-oriented task, in which debugging questions are conceptually expressed as queries over an execution of a program. I will discuss how this conceptual model enables massive-scale

parallelism to accelerate debugging (SledgeHammer OSDI’18), even those, such as information flow, that are "embarrassingly sequential" (JetStream OSDI’16). In addition, I will describe my recently published work on a relational model for decreasing the latency and complexity required when debugging our most pernicious software bugs (OmniTable OSDI’22).

Bio: Andrew Quinn is an assistant professor at the University of California, Santa Cruz. His research investigates systems, tools, techniques to make software more reliable. His dissertation focuses on solutions that help developers better understand their

software for tasks such as debugging, security forensics, and data provenance. In addition, he has recently been working on solutions to improve the reliability of applications that use emerging hardware, including persistent memory, heterogeneous systems, and edge computing, and new systems for production-grade deterministic record and replay.

Andrew graduated from the University of Michigan in 2021. While at Michigan, he received an NSF fellowship (2017) and a MSR fellowship (2017). Before Michigan, Andrew attended Dension University where received degrees in Computer Science and Mathematics. When not working, Andrew is likely wrestling with his dogs, enjoying a long run, or (a recent addition to the list) surfing.

Prof. Peter Alvaro, UCSC, “What not Where: Sharing in a World of Distributed, Persistent Memory”

Abstract: A world of distributed, persistent memory is on its way. Our programming models traditionally operate on short-lived data representations tied to ephemeral contexts such as processes or computers. In the limit, however, data lifetime is infinite compared to these transient actors. We discuss the implications for programming models raised by a world of large and potentially persistent distributed memories, including the need for explicit, context-free, invariant data references. We present a novel operating system that uses wisdom from both storage and distributed systems to center the programming model around data as the primary citizen, and reflect on the transformative potential of this change for infrastructure and applications of the future.

Bio: Peter Alvaro is an Associate Professor of Computer Science at the University of California Santa Cruz, where he leads the Disorderly Labs research group (disorderlylabs.github.io). His research focuses on using data-centric languages and analysis techniques to build and reason about data-intensive distributed systems, in order to make them scalable, predictable and robust to the failures and nondeterminism endemic to large-scale distribution. Peter earned his PhD at UC Berkeley, where he studied with Joseph M. Hellerstein. He is a recipient of the NSF CAREER Award, the Facebook Research Award, the USENIX ATC 2020 Best Presentation Award, the SIGMOD 2021 Distinguished PC Award, and the UCSC Excellence in Teaching Award.

Dr. Richard New, Vice President of Research, Western Digital, “Innovations in Storage Technology from Device Physics Through the Software Stack”

Abstract: Today's cloud infrastructure depends on an abundance of data stored primarily on HDDs and SSD, managed through layers of software within the framework of scaled-out distributed storage applications. While storage cost and performance have both scaled dramatically due to the introduction of new technologies at the device level, the basic protocols and mechanisms by which software talks to storage have been stable for decades. This talk will explore how recent changes in the physical storage media (HDDs and SSDs) are driving new storage interface protocols, and requiring changes in the storage stack up through to the software application layer for key software components in the cloud including file systems and databases. This talk will describe how two such technologies, Shingled Magnetic Recording (SMR) for HDDs and Zoned Namespace (ZNS) for SSDs, are driving drive new performance, reliability and cost efficiencies within the storage domain, but also require fundamental changes in the storage software layers. Finally, we'll look at where storage technologies are headed, and discuss briefly some further research opportunities for storage that we believe will be important in the next decade.

Bio: Richard New is the Vice President of Research at Western Digital, where he oversees the company’s advanced research across a range of topics, from physical sciences to software architecture. Richard received a BS in Electrical Engineering from the University of Waterloo, and an MS and PhD in Electrical Engineering from Stanford University. Richard was born in Cambridge, England, and grew up in Waterloo, Canada. He currently resides in Palo Alto with his family and enjoys working on robotics projects with his kids.

Prof. Heiner Litz, UCSC, “Unblocking Performance Bottlenecks in Data Center Workloads”

Abstract: Modern complex datacenter applications exhibit unique characteristics such as extensive data and instruction footprints, complex control flow, and hard-to-predict branches that are not adequately served by existing microprocessor architectures. In particular, these workloads exceed the capabilities of microprocessor structures such as the instruction cache, branch target buffer (BTB), branch predictor, and data caches, causing significant degradation of performance and energy efficiency.

In my talk, I will provide a detailed characterization of datacenter applications, highlighting the importance of addressing both frontend and backend performance issues. I will then introduce three new techniques that address these challenges by improving the branch predictor, data cache, and instruction scheduler. Finally, I will make the case for profile-guided optimizations that amortize overheads across the fleet and have been successfully deployed at Google and Intel, serving millions of users daily.

Bio: Heiner Litz is an Assistant Professor at the University of California, Santa Cruz, working in the field of Computer Architecture and Systems. His research focuses on improving the performance, cost, and efficiency of data center systems. Heiner is the recipient of the NSF CAREER Award, Intel's Outstanding Researcher Award, and a Google Faculty Award, and his work received the 2020 IEEE MICRO Top Pick award. Before joining UCSC, Heiner Litz was a researcher at Google and a postdoctoral research fellow at Stanford University with Profs. Christos Kozyrakis and David Cheriton. Dr. Litz received his Diplom and Ph.D. from the University of Mannheim, Germany, advised by Prof. Bruening.

Dr. Ulf Hanebutte, Marvell, “Accelerating ML Anomaly Detection Models for Networking in the Datacenter”

Abstract: AI/ML technologies are being widely deployed in a variety of applications, but their use within networking itself has been quite limited. This talk will showcase a solution for high-rate anomaly detection that combines a network providing smart statistics on groups of packets with a hardware-optimized autoencoder neural network derived from an ensemble of autoencoders.

Additionally, this talk will highlight the flexibility and performance of Marvell’s Machine Learning Inference Processor integrated within the upcoming OCTEON 10 DPU, which enables best-in-class inferencing directly in the data pipeline.

Bio: Dr. Ulf Hanebutte is a Sr. Principal Architect at Marvell with a focus on HW/SW co-design for Machine Learning. In this role he has contributed to multiple generations of ML inference accelerator hardware and their software stacks. Collaborating and solving big problems together has marked his extensive career, both at the National Labs and in the private sector, with projects ranging from exascale HPC to IoT for energy-efficient buildings. He holds a Ph.D. from Northwestern University and a Dipl.-Ing. in Aerospace Engineering from the University of Stuttgart.

Dr. Harry Liu, Alibaba, Director of Network R&D, “The Hyper-Converged Programmable Gateway in Alibaba's Edge Cloud”

Abstract: Edge cloud provides significant performance and cost advantages for emerging applications such as cloud gaming, video conferencing, and AR/VR. However, unlike central clouds, the edge cloud faces tremendous challenges due to limited resources, high performance demands, and hardware heterogeneity. Alibaba addresses these problems by introducing a hyper-converged gateway platform, “SNA”, that provides the cloud network stack and network functions within the network rather than on the hosts. SNA is a heterogeneous computing platform that merges network switching, network virtualization, and various network functions on top of programmable network ASICs, FPGAs, and CPUs. It has been deployed to support several multi-million-user products in Alibaba’s edge cloud. The key technical enabler for the rapid and safe deployment of the hyper-converged gateways running on SNA is our programmable network development platform, “TaiX”, which provides novel and practical programming abstractions, compilers, debuggers, testers, orchestrators, and operation tools.

Bio: Hongqiang "Harry" Liu is a Director of Network Research and Edge Network Infrastructure Engineering at Alibaba Cloud and Alibaba DAMO Academy. He received his Ph.D. from the Department of Computer Science at Yale University in 2014. His research focuses on data center networks, network transports, and programmable networks. He has published more than 20 papers in top-tier academic conferences, such as ACM SIGCOMM, ACM SOSP, and USENIX NSDI, and serves on the technical program committees of SIGCOMM and NSDI. He received an Honorable Mention for the prestigious ACM SIGCOMM Doctoral Dissertation Award in 2015.

Prof. Priyanka Raina, Stanford, “AHA: An Agile Approach to the Design of Coarse-Grained Reconfigurable Accelerators and Compilers”

Abstract: With the slowing of Moore's law, computer architects have turned to domain-specific hardware specialization to continue improving the performance and efficiency of computing systems. However, specialization typically entails significant modifications to the software stack to properly leverage the updated hardware. The lack of a structured approach for updating both the compiler and the accelerator in tandem has impeded many attempts to systematize this procedure. We propose a new approach to enable flexible and evolvable domain-specific hardware specialization based on coarse-grained reconfigurable arrays (CGRAs). Our agile methodology employs a combination of new programming languages and formal methods to automatically generate the accelerator hardware and its compiler from a single source of truth. This enables the creation of design-space exploration frameworks that automatically generate accelerator architectures that approach the efficiencies of hand-designed accelerators, with a significantly lower design effort for both hardware and compiler generation. Our current system accelerates dense linear algebra applications, but is modular and can be extended to support other domains. Our methodology has the potential to significantly improve the productivity of hardware-software engineering teams and enable quicker customization and deployment of complex accelerator-rich computing systems.

Bio: Priyanka Raina is an Assistant Professor of Electrical Engineering at Stanford University. She received her B.Tech. in Electrical Engineering from IIT Delhi in 2011, and her S.M. and Ph.D. in Electrical Engineering and Computer Science from MIT in 2013 and 2018, respectively. Priyanka’s research focuses on creating high-performance and energy-efficient architectures for domain-specific hardware accelerators in existing and emerging technologies, and on agile hardware-software co-design. Her research has won best paper awards at the ESSCIRC and MICRO conferences and in the JSSC journal. She is also a recipient of the Intel Rising Star Faculty Award and the Hellman Faculty Scholar Award, and is a Terman Faculty Fellow.

Attendees mingle during the Poster Session at the 2016 Stanford Workshop in Tresidder Memorial Union.

Dr. Amin Vahdat, Google Engineering Fellow and VP, presents “Cloud 3.0 and Software Defined Networking” to the audience during the morning technical session of the 2016 Stanford Workshop in Tresidder Memorial Union.

QUOTES FROM PREVIOUS CLOUD WORKSHOPS

Professor David Patterson, the Pardee Professor of Computer Science, UC Berkeley, “I saw strong participation at the Cloud Workshop, with some high energy and enthusiasm; and I was delighted to see industry engineers bring and describe actual hardware, representing some of the newest innovations in the data center.”

Professor Christos Kozyrakis, Professor of Electrical Engineering & Computer Science, Stanford University, “As a starting point, I think of these IAP workshops as ‘Hot Chips meets ISCA’, i.e., an intersection of industry’s newest solutions in hardware (Hot Chips) with academic research in computer architecture (ISCA); but more so, these workshops additionally cover new subsystems and applications, and in a smaller venue where it is easy to discuss ideas and cross-cutting approaches with colleagues.”

Professor Ken Birman, the N. Rama Rao Professor of Computer Science, Cornell University, “I actually thought it was a fantastic workshop, an unquestionable success, starting from the dinner the night before, through the workshop itself, to the post-event reception for the student Best Poster Awards.”

Professor Hakim Weatherspoon, Professor of Computer Science, Cornell University, “I have participated in three IAP Workshops since the very first one at Cornell and it is great to see that the IAP premise was a success now as it was then, bringing together industry and academia in a focused workshop and an all-day exchange of ideas. It was a fantastic experience and I look forward to the next IAP Workshop.”

Dr. Carole-Jean Wu, Research Scientist, AI Infrastructure, Facebook Research, and Professor of CSE, Arizona State University, “The IAP Cloud Computing workshop provides a great channel for valuable interactions between faculty/students and the industry participants. I truly enjoyed the venue learning about research problems and solutions that are of great interest to Facebook, as well as the new enabling technologies from the industry representatives. The smaller venue and the poster session fostered an interactive environment for in-depth discussions on the proposed research and approaches and sparked new collaborative opportunities. Thank you for organizing this wonderful event! It was very well run.”

Nathan Pemberton, PhD student, UC Berkeley, "IAP workshops provide a valuable chance to explore emerging research topics with a focused group of participants, and without all the time/effort of a full-scale conference. Instead of rushing from talk to talk, you can slow down and dive deep into a few topics with experts in the field."

Prof. Vishal Shrivastav, Purdue University, “Attending the IAP workshop was a great experience and very rewarding. I really enjoyed the many amazing talks from both the industry and academia. My personal conversations with several industry leaders at the workshop will definitely guide some of my future research.”

Prof. Ana Klimovic, ETH Zurich, “I have attended three IAP workshops and I am consistently impressed by the quality of the talks and the breadth of the topics covered. These workshops bring top-tier industry and academia together to discuss cutting-edge research challenges. It is a great opportunity to exchange ideas and get inspiration for new research opportunities.”

Dr. Pankaj Mehra, VP of Product Planning, Samsung, "Terrific job organizing the Workshop that gave all parties -- students, faculty, industry -- something worthwhile to take back."

Shay Gal-On, Principal Engineer, Marvell, “The IAP workshop was well worth the time spent, with high quality topics explored at a good depth, as well as an excellent candidate pool for internships. I will definitely come again.”

Dr. Richard New, VP Research, Western Digital, “IAP workshops provide a great opportunity to meet with professors and students working at the cutting edge of their fields. It was a pleasure to attend the event – lots of very interesting presentations and posters.”