Webinar Series: Topics in AI

|

Friday, December 10, 2021, 11am-12pm PT

Dr. Neeraja Yadwadkar, VMware Research (Joined UT Austin ECE Faculty in 2022)

BEST PAPER AWARD

2021 USENIX Annual Technical Conference

INFaaS: Automated Model-less Inference Serving

Francisco Romero, Qian Li, Neeraja J. Yadwadkar, and Christos Kozyrakis, Stanford University

Webinar Video

Neeraja conducted this research while a post-doc with Prof. Christos Kozyrakis at Stanford, one of our IAP Advisors.

Abstract: Despite existing work in machine learning inference serving, ease-of-use and cost efficiency remain challenges at large scales. Developers must manually search through thousands of model-variants (versions of already-trained models that differ in hardware, resource footprints, latencies, costs, and accuracies) to meet the diverse application requirements. Since requirements, query load, and applications themselves evolve over time, these decisions need to be made dynamically for each inference query to avoid excessive costs through naive autoscaling. To avoid navigating through the large and complex trade-off space of model-variants, developers often fix a variant across queries, and replicate it when load increases. However, given the diversity across variants and hardware platforms in the cloud, a lack of understanding of the trade-off space can incur significant costs to developers. In this talk, I will make a case for inference serving to be model-less. Further, I will talk about INFaaS, an automated model-less system for distributed inference serving, where developers simply specify the performance and accuracy requirements for their applications without needing to specify a specific model-variant for each query. INFaaS generates model-variants from already trained models, and efficiently navigates the large trade-off space of model-variants on behalf of developers to meet application-specific objectives: (a) for each query, it selects a model, hardware architecture, and model optimizations, and (b) it combines VM-level horizontal autoscaling with model-level autoscaling, where multiple, different model-variants are used to serve queries within each machine. I will discuss the effectiveness of INFaaS in improving throughput, latency, and meeting user-specified service level objectives. I will conclude the talk with potential next steps in the direction of model-less inference serving.

Bio: Neeraja Yadwadkar will be joining the department of ECE at UT Austin, in Fall 2022, as an assistant professor. She is currently a post-doctoral researcher at VMware Research. She is a Cloud Computing Systems researcher, with a strong background in Machine Learning (ML). Most of Neeraja's research straddles the boundaries of systems and ML. Advances in systems, ML, and hardware architectures are about to launch a new era in which we can use the entire cloud as a computer. New ML techniques are being developed for solving complex resource management problems in systems. Similarly, systems research is being influenced by properties of emerging ML algorithms and evolving hardware architectures. Bridging these complementary fields, Neeraja's research focuses on using and developing ML techniques for systems, and building systems for ML. Neeraja was a postdoc at Stanford University working with Christos Kozyrakis. She graduated with a PhD in Computer Science from the RISE Lab at University of California, Berkeley, working with Randy Katz and Joseph Gonzalez. Before starting her PhD, she received her masters in Computer Science from the Indian Institute of Science, Bangalore, India, and her bachelors from the Government College of Engineering, Pune.

Thursday, November 18, 2021, 11am-12pm PT

Prof. Yang Liu, UC Santa Cruz

Learning from Weak Supervisions: the Knowledge of Noise, Loss Corrections, and New Challenges

Webinar Video

Dr. Liu received two Best Paper Awards in 2021, and was awarded a $1M research grant from NSF for Fairness in Machine Learning.

Abstract: Learning from weak supervisions is a prevalent challenge in machine learning: in supervised learning, the training labels are often solicited from human annotators, which encode human-level mistakes; in semi-supervised learning, the artificially supervised pseudo labels are immediately imperfect; in reinforcement learning, the collected rewards can be misleading, due to faulty sensors. The list goes on. In this talk, I will first introduce our group’s recent works on tackling two classical challenges in weakly supervised learning: 1) estimating the noise rates in the weak supervisions, and 2) designing robust loss functions. I will explain both the theoretical and empirical advantages of our approaches. Then I will present a result that reveals the “Matthew effect” of most of the existing solutions. This observation cautions the use of these tools and provides a new evaluation criterion for future developments.

Bio: Dr. Yang Liu is currently an Assistant Professor of Computer Science and Engineering at UC Santa Cruz. He was previously a postdoctoral fellow at Harvard University. He obtained his PhD degree from the Department of EECS, University of Michigan, Ann Arbor in 2015. He is interested in crowdsourcing and algorithmic fairness, both in the context of machine learning. His works have seen applications in high-profile projects, such as the Hybrid Forecasting Competition organized by IARPA, and Systematizing Confidence in Open Research and Evidence (SCORE) organized by DARPA. His works have also been covered by WIRED and WSJ. His works have won three best paper awards.

Thursday, August 12, 2021, 11am-12pm PT

Prof. Vijay Janapa Reddi, Harvard

TinyMLPerf: Benchmarking Ultra-low Power Machine Learning Systems

Webinar Video

Abstract: Tiny machine learning (ML) is poised to drive enormous growth within the IoT hardware and software industry. Measuring the performance of these rapidly proliferating systems, and comparing them in a meaningful way presents a considerable challenge; the complexity and dynamicity of the field obscure the measurement of progress and make embedded ML application and system design and deployment intractable. To foster more systematic development, while enabling innovation, a fair, replicable, and robust method of evaluating tinyML systems is required. A reliable and widely accepted tinyML benchmark is needed. To fulfill this need, tinyMLPerf is a community-driven effort to extend the scope of the existing MLPerf benchmark suite (mlperf.org) to include tinyML systems. With the broad support of over 75 member organizations, the tinyMLPerf group has begun the process of creating a benchmarking suite for tinyML systems. The talk presents the goals, objectives, and lessons learned (thus far), and welcomes others to join and contribute to tinyMLPerf.

Bio: Prof. Janapa Reddi is an Associate Professor in the John A. Paulson School of Engineering and Applied Sciences at Harvard University. Prior to joining Harvard, he was an Associate Professor at The University of Texas at Austin in the Department of Electrical and Computer Engineering. He is a founding member of MLCommons, a non-profit organization focused on accelerating AI innovation, and serves on the MLCommons Board of Directors. He is a Co-Chair of MLPerf Inference, which is responsible for fair and useful benchmarks for measuring training and inference performance of ML hardware, software, and services. He works closely with the industry. He spent his academic sabbatical at Google from 2017 to early 2019 and over the years he has consulted for other companies such as Facebook, Intel and AMD.

His primary research interests include computer architecture and system-software design to enable mobile computing and autonomous machines. His secondary research interests include building high-performance, energy-efficient and resilient computer systems. Dr. Janapa Reddi is a recipient of multiple honors and awards, including the National Academy of Engineering (NAE) Gilbreth Lecturer Honor (2016), IEEE TCCA Young Computer Architect Award (2016), Intel Early Career Award (2013), Google Faculty Research Awards (2012, 2013, 2015, 2017, 2020), Best Paper at the 2005 International Symposium on Microarchitecture (MICRO), Best Paper at the 2009 International Symposium on High Performance Computer Architecture (HPCA), MICRO and HPCA Hall of Fame (2018 and 2019, respectively), and IEEE’s Top Picks in Computer Architecture awards (2006, 2010, 2011, 2016, 2017). Beyond his technical research contributions, Dr. Janapa Reddi is passionate about STEM education. He is responsible for the Austin Independent School District’s “hands-on” computer science (HaCS) program, which teaches sixth- and seventh-grade students programming and the general principles that govern a computing system using open-source electronic prototyping platforms. He received a B.S. in computer engineering from Santa Clara University, an M.S. in electrical and computer engineering from the University of Colorado at Boulder, and a Ph.D. in computer science from Harvard University.

Thursday, May 13, 2021, 11am-12pm PT

Prof. Manya Ghobadi, MIT

Optimizing AI Systems with Optical Technologies

Webinar Video

Abstract: Our society is rapidly becoming reliant on deep neural networks (DNNs). New datasets and models are invented frequently, increasing the memory and computational requirements for training. The explosive growth has created an urgent demand for efficient distributed DNN training systems. In this talk, I will discuss the challenges and opportunities for building next-generation DNN training clusters. In particular, I will propose optical network interconnects as a key enabler for building high-bandwidth ML training clusters with strong scaling properties. Our design enables accelerating the training time of popular DNN models using reconfigurable topologies by partitioning the training job across GPUs with hybrid data and model parallelism while ensuring the communication pattern can be supported efficiently on an optical interconnect. Our results show that compared to similar-cost interconnects, we can improve the training iteration time by up to 5x.

Bio: Manya Ghobadi is an assistant professor at the EECS department at MIT. Before MIT, she was a researcher at Microsoft Research and a software engineer at Google Platforms. Manya is a computer systems researcher with a networking focus and has worked on a broad set of topics, including data center networking, optical networks, transport protocols, and network measurement. Her work has won the best dataset award and best paper award at the ACM Internet Measurement Conference (IMC) as well as Google research excellent paper award.

Thursday, January 28, 2021, 11am-12pm PT

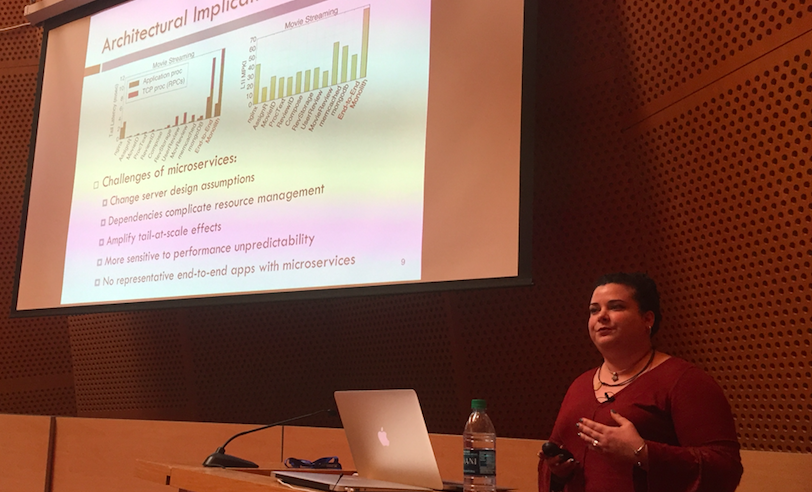

Prof. Christina Delimitrou, Cornell

Leveraging ML to Handle the Increasing Complexity of the Cloud

Webinar Video

Christina has received numerous awards for her research at Stanford and Cornell, most recently the 2020 TCCA Young Computer Architect Award.

Abstract: Cloud services are increasingly adopting new programming models, such as microservices and serverless compute. While these frameworks offer several advantages, such as better modularity, ease of maintenance and deployment, they also introduce new hardware and software challenges. In this talk, I will briefly discuss the challenges that these new cloud models introduce in hardware and software, and present some of our work on employing ML to improve the cloud’s performance predictability and resource efficiency. I will first discuss Seer, a performance debugging system that identifies root causes of unpredictable performance in multi-tier interactive microservices, and Sage, which improves on Seer by taking a completely unsupervised learning approach to data-driven performance debugging, making it both practical and scalable.

Bio: Christina Delimitrou is an Assistant Professor and the John and Norma Balen Sesquicentennial Faculty Fellow at Cornell University, where she works on computer architecture and computer systems. She specifically focuses on improving the performance predictability and resource efficiency of large-scale cloud infrastructures by revisiting the way these systems are designed and managed. Christina is the recipient of the 2020 TCCA Young Computer Architect Award, an Intel Rising Star Award, a Microsoft Research Faculty Fellowship, an NSF CAREER Award, a Sloan Research Scholarship, two Google Research Awards, and a Facebook Faculty Research Award. Her work has also received 4 IEEE Micro Top Picks awards and several best paper awards. Before joining Cornell, Christina received her PhD from Stanford University. She had previously earned an MS also from Stanford, and a diploma in Electrical and Computer Engineering from the National Technical University of Athens.
More information can be found at: http://www.csl.cornell.edu/~delimitrou/ Below, Christina presents at the 2018 MIT Cloud Workshop. |

Thursday, March 25, 2021, 11am-12pm PT

Prof. Ana Klimovic, ETH Zurich

Ingesting and Processing Data Efficiently for Machine Learning

Webinar Video

Abstract: Machine learning applications have sparked the development of specialized software frameworks and hardware accelerators. Yet, in today’s machine learning ecosystem, one important part of the system stack has received far less attention and specialization for ML: how we store and preprocess training data. This talk will describe the key challenges for implementing high-performance ML input data processing pipelines. We analyze millions of ML jobs running in Google's fleet and find that input pipeline performance significantly impacts end-to-end training performance and resource consumption. Our study shows that ingesting and preprocessing data on-the-fly during training consumes 30% of end-to-end training time, on average. Our characterization of input data pipelines motivates several systems research directions, such as disaggregating input data processing from model training and caching commonly reoccurring input data computation subgraphs. We present the multi-tenant input data processing service that we are building at ETH Zurich, in collaboration with Google, to improve ML training performance and resource usage.

Bio: Ana Klimovic is an Assistant Professor in the Systems Group of the Computer Science Department at ETH Zurich. Her research interests span operating systems, computer architecture, and their intersection with machine learning. Ana's work focuses on computer system design for large-scale applications such as cloud computing services, data analytics, and machine learning. Before joining ETH in August 2020, Ana was a Research Scientist at Google Brain and completed her Ph.D. in Electrical Engineering at Stanford University in 2019. Her dissertation research was on the design and implementation of fast, elastic storage for cloud computing.

Below, Ana receives the Best Poster Award at the 2018 Stanford-UCSC Workshop.

Thursday, November 19, 2020, 11am-12pm PT

|